What Is Computer Vision and How It Helps Businesses: iOS Use Case

Drawing from my firsthand experience in the tech world, I’ve always been intrigued by the vast potential of computer vision, especially its transformative power when infused with the Apple ecosystem — more precisely, iOS.

Apple, a trailblazer in innovation, has consistently integrated advanced features into its products, and its venture into computer vision is no exception. With its sophisticated hardware-software synergy and vast user base, the iOS platform offers a fertile ground for businesses to harness computer vision’s power, creating more intelligent, user-friendly, and ultimately transformative applications.

In this article, we will explore the intricacies of computer vision, its benefits, and real-world applications. Specifically, I’ll spotlight my journey of integrating it into an iOS app while working closely with one of DashDevs’ esteemed clients.

What is Computer Vision?

Computer Vision, frequently shortened to CV, emerges at the crossroads of artificial intelligence (AI) and image processing. The main goal is to equip machines with the capability to both analyze images and act upon visual information, akin to how humans employ their sight and cognitive faculties to comprehend visual signals from their surroundings.

Vision algorithms detect objects in still images. Source: Apple Vision documentation

Having been hands-on with various projects, I’ve come to appreciate the depth of what Computer Vision seeks to emulate — the very essence of human vision and sight. The field is vast, touching upon various facets like:

- Image Classification: Determining what an image contains. For example, discerning if a photograph contains a cat or a dog.

- Object Detection: Identifying multiple objects in an image and their locations.

- Facial Recognition: Recognizing and distinguishing individual faces.

- Scene Reconstruction: Creating a 3D model of a scene from a set of 2D images.

- Image Segmentation: Partitioning an image into multiple segments, each corresponding to a different object or part of an object.

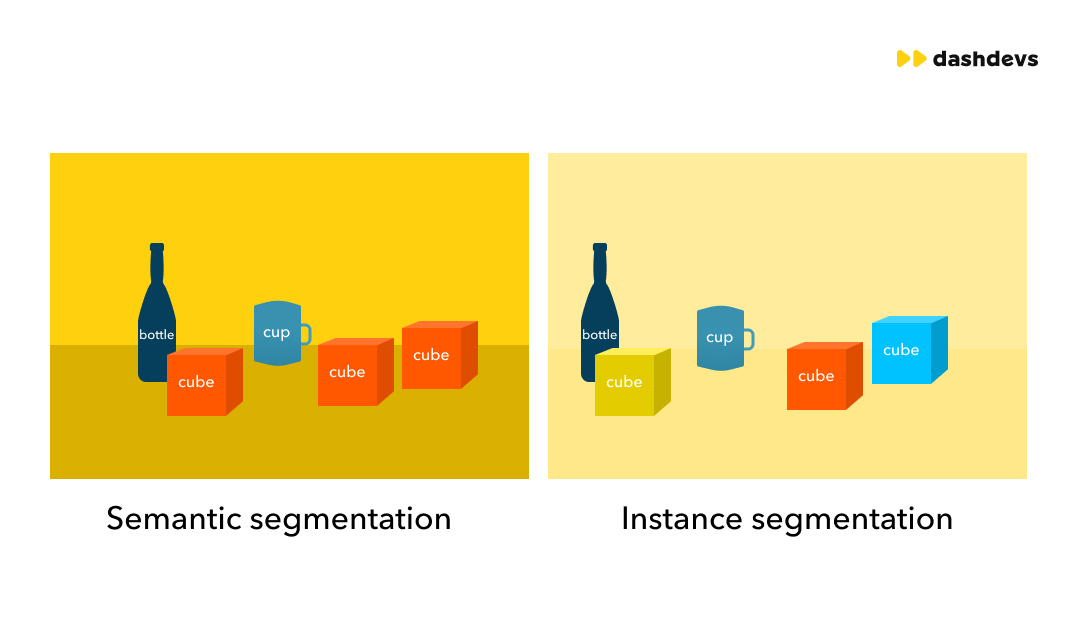

Image segmentation, the last item on this list, comes in two main types: instance segmentation and semantic segmentation.

In instance segmentation, the goal is to distinguish individual instances of objects within the image. For instance, if an image has two cars, this process would separate each car into its own unique segment, even though both belong to the same object class of “cars.”

On the other hand, semantic segmentation categorizes every pixel in the image based on the class to which it belongs, such as ‘car,’ ‘tree,’ ‘house,’ and so on. Unlike instance segmentation, semantic segmentation doesn’t differentiate between individual instances of the same class. Therefore, in the case of an image with two cars, semantic segmentation would label all pixels corresponding to both cars simply as “car” without distinguishing between the two vehicles.
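To make the difference concrete, here is a minimal Python sketch on a toy mask. It is an illustration only: the mask, the class numbering, and the function name `instances_from_semantic` are assumptions made for this example, and grouping connected pixels is a simple stand-in for what a real instance segmentation model does (it would fail, for example, when two cars touch or overlap).

```python
from collections import deque

# Toy semantic mask: every pixel carries only a class label.
# 0 = background, 1 = "car". Both cars share the same label.
SEMANTIC_MASK = [
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 1, 1],
    [0, 0, 0, 0, 1, 1],
]


def instances_from_semantic(mask, target_class):
    """Give each 4-connected blob of target_class its own id (1, 2, ...).

    Returns (instance_ids, count). Pixels of other classes keep id 0.
    """
    rows, cols = len(mask), len(mask[0])
    instance_ids = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != target_class or instance_ids[r][c]:
                continue
            # Found an unlabeled pixel: start a new instance and flood-fill it.
            count += 1
            instance_ids[r][c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and mask[ny][nx] == target_class and not instance_ids[ny][nx]:
                        instance_ids[ny][nx] = count
                        queue.append((ny, nx))
    return instance_ids, count
```

Calling `instances_from_semantic(SEMANTIC_MASK, 1)` separates the single "car" class into two instances: the semantic mask answers "which pixels are car?", while the instance map answers "which car does each pixel belong to?".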

How Computer Vision Systems Perceive and Interpret Visual Information

Contrary to our organic, holistic way of viewing the world, computers perceive things differently. Instead of a cohesive scene, they see a mosaic of pixel values. Having been a part of numerous projects, I’ve watched in awe as machines, through ingenious algorithms, discern patterns, contours, hues, and textures.

For instance, imagine teaching a computer to detect the edge of a table. To us, it’s an obvious boundary, but to a computer? It’s a puzzle solved using edge detection algorithms, discerning one item from another, pixel by pixel.
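The table-edge idea can be sketched in a few lines of Python. This is a deliberately simplified illustration, not how any particular framework implements it: the image is a hypothetical grid of grayscale values (0 to 255), and `horizontal_edges` and its `threshold` parameter are names invented for this example. Production edge detectors, such as Sobel or Canny, add smoothing and look in more than one direction.

```python
def horizontal_edges(image, threshold):
    """Return (row, col) positions where brightness changes sharply.

    A position is reported when the pixel at col and its right-hand
    neighbour differ by at least `threshold`.
    """
    edges = []
    for r, row in enumerate(image):
        for c in range(len(row) - 1):
            if abs(row[c + 1] - row[c]) >= threshold:
                edges.append((r, c))
    return edges


# A bright table top (200) next to a dark floor (40).
IMAGE = [
    [200, 200, 200, 40, 40],
    [200, 200, 200, 40, 40],
    [200, 200, 200, 40, 40],
]

table_edge = horizontal_edges(IMAGE, threshold=50)
```

Here `table_edge` contains one position per row, all in the same column, which is the vertical boundary between the table and the floor.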

Apple, with its constant endeavor to simplify complex technologies for developers, offers the Vision framework to facilitate the integration of CV into iOS apps. Whether you’re looking to detect faces, read barcodes, or track objects in videos, the Vision framework provides tools and APIs that make these tasks simpler.

Real-world Applications of iOS Computer Vision in Various Industries

The integration of computer vision into the iOS ecosystem has not only added a layer of sophistication to apps but has also introduced transformative solutions across various industries. Let’s delve into the myriad applications this technology enhances, reinventing how businesses operate and serve customers.

Vision algorithms identify objects in real-time video. Source: Apple Vision documentation

Healthcare

Disease Detection: iOS apps can now process medical images, identifying signs of diseases like tumors or retinal damage, in some cases with accuracy approaching that of medical professionals.

Medical Image Analysis: Detailed analysis of X-rays, MRIs, and other diagnostic images is made simpler and more efficient, facilitating quicker diagnoses and treatment plans.

Patient Monitoring: Using computer vision, apps can monitor patients’ physical movements, detecting anomalies or emergencies and sending real-time alerts to medical staff.

Retail

Automated Checkouts: With the help of computer vision, iOS apps allow users to scan products and check out seamlessly, reducing queues and enhancing the shopping experience.

Inventory Management: Stores can automatically track inventory, identifying when stocks are low or when products are misplaced.

Customer Insights: By analyzing customers’ in-store movements and interactions with products, retailers can gain invaluable insights into shopping behaviors and preferences.

Agriculture

Crop Health Monitoring: Drones equipped with iOS devices can capture images of vast stretches of farmland, and computer vision algorithms can analyze these images to detect crop diseases or pest infestations.

Precision Farming: Farmers can optimize the use of water, fertilizers, and pesticides by analyzing data from computer vision systems, ensuring higher yields and sustainable farming.

Automotive

Autonomous Vehicles: Fully autonomous cars are still in the development phase, but computer vision already plays a crucial role in semi-autonomous vehicles, powering obstacle detection, lane departure warnings, and parking assistance.

Driver Assistance Systems: By processing real-time visuals, iOS apps can alert drivers about potential collisions, pedestrians, or unsafe driving conditions.

Real Estate

Virtual Property Tours: Prospective buyers or renters can take virtual tours of properties using iOS devices, with computer vision enhancing the realism and interactivity of these tours.

Predictive Maintenance: By analyzing visuals of properties, a computer vision system can predict when parts of a property might require maintenance or repairs.

Others

Entertainment: In gaming and entertainment apps, computer vision can track users’ movements and facial expressions, enhancing interactivity and immersion.

Finance: Banks and financial institutions can use computer vision for document verification, fraud detection, and customer onboarding through facial recognition.

Manufacturing: Computer vision can identify defects in products on assembly lines, ensure quality control, and optimize inventory management.

Having seen firsthand how widely computer vision is used in iOS applications across these industries, I’m more convinced than ever of its potential. Whether it’s optimizing existing processes or introducing novel solutions, the technology promises a future where businesses operate with heightened efficiency, accuracy, and user-centricity.

Case Study: How We Implemented Computer Vision Technology in an iOS App for Logistics Companies

Having worked closely with Alko Prevent, a frontrunner in AI-powered alcohol monitoring, I’ve gained a unique perspective on the challenges and solutions in this domain. Let me take you through our collaborative journey.

Alko Prevent’s mission is to help businesses stay safe by preventing alcohol-related accidents involving employees, passengers, and freight. With an array of products equipped with facial recognition, Alko Prevent stands firm against alcohol-induced workplace incidents.

Alko Prevent approached DashDevs, recognizing our expertise in crafting sophisticated mobile applications. Their objective was crystal clear: to develop an iOS solution that could seamlessly integrate with their AI-powered system for detecting secondary signs of alcohol intoxication.

Taking on this challenge, our team conducted rigorous research. We delved deep into understanding the nuances of alcohol intoxication, how it manifests externally, and the intricacies of integrating alcohol intoxication recognition into a mobile platform. Our brainstorming sessions were intense, often stretching late into the night.

As a result, we proposed using Computer Vision technology to solve the task the client shared with us. Even at that early stage, we understood that this technology would help the client provide exceptional value to the logistics market.

The Challenge: Dangers of Alcohol Intoxication among Drivers

Alcohol intoxication is a significant peril in the logistics industry. According to the research of our client, a single mishap can lead to damages upwards of 300,000 EUR, not accounting for indirect repercussions. The gravity of this issue, compounded by potential non-compensation scenarios for drunk driving incidents, necessitated a cutting-edge, preventative solution.

From the outset, we faced a series of challenges. The foremost was ensuring that the software maintained the same high level of accuracy as Alko Prevent’s stationary devices. Any reduction in precision could compromise user safety and the integrity of Alko Prevent’s brand.

Solution Development and Implementation: Using Apple’s Vision Framework

In an era where technology continually redefines the bounds of the possible, imagine harnessing the power of your mobile device to detect subtle signs of alcohol intoxication! At the beginning of the project, it sounded like the stuff of sci-fi movies to me. Yet here we are, turning this awe-inspiring vision into a tangible reality. That was fascinating!

After Alko Prevent entrusted us with developing an iOS solution, we began an extensive evaluation of the available frameworks suitable for this initiative. Our objective was to identify a tool that not only provided excellent facial recognition capabilities but also integrated seamlessly with iOS.

Among the myriad of Computer Vision solutions for iOS, the most prominent ones we considered were:

- Apple Vision: Apple’s proprietary framework for computer vision tasks.

- Core ML with custom models: Apple’s machine learning framework that allows developers to integrate custom-trained models into their apps.

- OpenCV for iOS: A versatile, open-source computer vision library with wide-ranging capabilities.

- TensorFlow Lite: Google’s lightweight solution for mobile and embedded devices, tailored for on-device machine learning inference with a small binary size.

After extensive deliberation, we opted for Apple Vision for the following reasons:

- Native Integration: Being an Apple framework, Vision offers seamless integration with the iOS ecosystem. This ensures optimal performance, stability, and efficient power consumption.

- Accuracy and Speed: Apple Vision has been tailored for iOS devices, ensuring real-time and high-accuracy results, crucial for the timely detection of alcohol intoxication signs.

- Ease of Development: With comprehensive documentation and support from Apple, the development process using the Vision framework was straightforward. This allowed for a shorter development cycle, ensuring the product reached the market in a timely manner.

- Continuous Updates: As Apple’s proprietary product, Vision is regularly updated to incorporate the latest advancements in computer vision technology, ensuring that the application remains at the forefront of facial recognition capabilities.

While other solutions, like OpenCV or TensorFlow Lite, offer extensive capabilities and flexibility, they may not provide the same level of native optimization and privacy assurance that Apple Vision does. The choice became evident — to deliver the best experience for iOS users while upholding the core values of accuracy and privacy, Apple Vision was the ideal framework for our project with Alko Prevent.

We capitalized on Apple’s Vision framework, renowned for its deep learning models and adeptness at understanding images and videos. The solution comprised several nuanced tests:

Blink Rate Analysis: Using the image analysis features of Vision, the application discerns the blink rate, associating slower blinks with potential intoxication.

Identity Confirmation: The application verifies the user’s identity throughout the tests. Users are instructed to turn their heads and blink while keeping their face inside a fixed on-screen frame; any deviation restarts the test.
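As a rough illustration of the blink analysis described above, the Python sketch below turns a per-frame "eye openness" series into a blink rate and an average blink duration. Everything in it is an assumption made for the example: the openness values (1.0 for fully open, 0.0 for closed) would in practice be derived from facial landmarks, and the function name `analyze_blinks` and the `closed_below` threshold are hypothetical rather than taken from the production app.

```python
def analyze_blinks(openness, fps, closed_below=0.2):
    """Return (blinks_per_minute, mean_blink_seconds).

    `openness` holds one value per video frame; a blink is a run of
    consecutive frames whose value is below `closed_below`.
    """
    blink_lengths = []  # length of each blink, in frames
    run = 0
    for value in openness:
        if value < closed_below:
            run += 1
        elif run:
            blink_lengths.append(run)
            run = 0
    if run:  # the recording ended while the eyes were closed
        blink_lengths.append(run)

    duration_s = len(openness) / fps
    if not blink_lengths or duration_s == 0:
        return 0.0, 0.0

    rate = len(blink_lengths) * 60.0 / duration_s
    mean_seconds = sum(blink_lengths) / len(blink_lengths) / fps
    return rate, mean_seconds


# Three seconds of video at 10 fps with a short and a longer blink.
sample = [1.0] * 10 + [0.1] * 3 + [1.0] * 10 + [0.1] * 5 + [1.0] * 2
rate, mean_seconds = analyze_blinks(sample, fps=10)
```

A longer `mean_seconds` corresponds to the "slower blinks" mentioned above; what counts as unusually slow would have to come from validated data, not from this sketch.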

We also used other frameworks, such as ARKit and the Speech framework, to extend the app’s functionality. Here is how these frameworks helped us enhance our solution:

Augmented Reality Walking Test: Users draw a line and attempt to walk along it. Deviations, identified by a gyroscope, hint at possible intoxication.

Descriptive Test: Users narrate a displayed image. Speech-to-text conversion aids in the analysis of speech speed and clarity.

Pulse Measurement: A user places their finger on the camera while the device’s torch is activated. The illumination allows the application to capture blood flow frequency, deducing heart rate from it. An elevated pulse can sometimes signal alcohol ingestion.
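The pulse measurement idea can be sketched as follows. This Python example assumes that each camera frame has already been reduced to a single average brightness value, which rises and falls with blood flow; the function names `estimate_bpm` and `synthetic_signal` are invented for this illustration, and a real implementation would also need to filter noise and reject finger movement.

```python
import math


def estimate_bpm(brightness, fps):
    """Estimate beats per minute from a per-frame brightness series.

    Counts the moments where the signal rises through its mean and
    converts the average spacing between them into a rate.
    Returns None when fewer than two beats are visible.
    """
    if len(brightness) < 2:
        return None
    mean = sum(brightness) / len(brightness)
    crossings = [
        i for i in range(1, len(brightness))
        if brightness[i - 1] <= mean < brightness[i]
    ]
    if len(crossings) < 2:
        return None
    seconds = (crossings[-1] - crossings[0]) / fps
    return (len(crossings) - 1) * 60.0 / seconds


def synthetic_signal(bpm, fps, seconds):
    """Generate a clean sine wave standing in for real camera data."""
    beats_per_second = bpm / 60.0
    return [
        math.sin(2 * math.pi * beats_per_second * (i / fps) + 1.0)
        for i in range(int(fps * seconds))
    ]


estimated = estimate_bpm(synthetic_signal(bpm=72, fps=30, seconds=10), fps=30)
```

On the synthetic signal the estimate lands close to the 72 beats per minute that generated it; real fingertip footage is far noisier, so treat this as a picture of the principle rather than a measurement method.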

Working hand-in-hand with Alko Prevent’s team, we fine-tuned the AI algorithms to optimize performance on the iOS platform, ensuring that the detection process was both rapid and accurate. A rigorous testing phase followed, where real-world scenarios were simulated to validate the solution’s reliability.

With the combined power of Apple’s Vision framework and the Drugger device, our iOS application stands as one of the most advanced tools for alcohol intoxication detection available today.

Results & Feedback

Quantifiable Improvements:

With Vision’s precise detection, there were:

- 52% reduction in incidents.

- 73% rise in driver self-monitoring.

- Over 95% accuracy in intoxication detection.

Vision’s prowess was applauded for being a “technological marvel,” drastically reducing risk. Employees from different logistics companies, over time, viewed the system as a personal safety net.

To sum up, Apple’s Vision framework has demonstrated its exceptional capability to address real-world challenges in our project. Through its incorporation, Alko Prevent has successfully ushered in an era of enhanced safety and responsibility in the logistics sector.

Why Choose Apple Vision Framework Among Others?

Within the expansive domain of computer vision, Apple’s iOS emerges as a beacon of innovation and practical application. With its hallmark blend of cutting-edge hardware and finely tuned software, the iOS platform presents unparalleled opportunities for the integration of computer vision. Here are the benefits of building your Computer Vision project using the Vision framework by Apple:

Powerful Processing: Apple’s hardware, from its custom-designed chips like the A-series and M-series to its GPUs, is optimized for high-performance tasks, including computer vision. This ensures that CV-based applications run smoothly, providing users with real-time responses.

On-device Processing: One of Apple’s defining features is its commitment to user data privacy. Many CV tasks on iOS can be performed directly on the device, ensuring that sensitive visual data doesn’t need to be transmitted to external servers.

Secure Enclave: Apple devices include the Secure Enclave, a dedicated subsystem that keeps sensitive data, such as the biometric data behind Face ID, isolated from the rest of the system and inaccessible to potential breaches.

iCloud Integration: Visual data processed through computer vision can be stored and synced across devices using iCloud, offering users a seamless experience.

ARKit Synergy: Computer vision complements Augmented Reality. By integrating CV with ARKit, Apple’s AR development platform, apps can deliver more immersive and interactive experiences.

Seamless Integration Across Devices: An app developed for the iPhone can easily be optimized for other devices in the Apple ecosystem, like the iPad and Mac. This offers users a consistent experience, whether they’re switching devices or using them simultaneously.

Rich Resources: Apple provides developers with a plethora of documentation, sample code, and tutorials, enabling even those new to computer vision to get started efficiently.

Vibrant Community: Apple’s developer community is one of the most active and supportive. Challenges faced during development can often be addressed with the collective wisdom of thousands of developers worldwide.

In conclusion, the fusion of computer vision with the iOS platform offers businesses a strategic advantage. From enhanced user experience and privacy to the vast support system available for developers, the integration promises not just innovation but also efficiency and trustworthiness.

Limitations and Considerations

As we delve deeper into the world of Computer Vision (CV) within the iOS ecosystem, it’s essential to balance the excitement and promise with a pragmatic understanding of the limitations and considerations that come with this integration. Every technological advancement, no matter how profound, comes with its set of challenges, and CV on iOS is no exception. Here’s a comprehensive look:

iOS-only Audience: By focusing solely on the iOS platform, businesses might miss out on engaging with a broader audience that uses other platforms, such as Android. This could limit the market reach and potential user base.

If you need to build an app for both Android and iOS, consider OpenCV or TensorFlow Lite. OpenCV is an open-source CV library that works on both Android and iOS. But beware: it can be tricky for beginners because it requires more setup than platform-specific solutions. TensorFlow Lite is also a lightweight, cross-platform solution, but it requires an understanding of how ML models are trained.

Potential Higher Development Cost: Native iOS app development, especially one integrating advanced features like CV, might be more costly in terms of both time and resources compared to more general solutions or cross-platform development.

Continuous Updates: Apple is known for its frequent software updates. Developers need to ensure that their apps, especially those using advanced capabilities like CV, remain compatible with the latest iOS versions. This might require regular app updates, testing, and occasional rework.

Hardware Limitations: While newer Apple devices come packed with advanced chips optimized for tasks like CV, older devices might not offer the same level of performance. This could lead to varied user experiences based on the device being used.

Complexity of Advanced CV Tasks: Implementing advanced CV functionalities might be challenging and might require specialized expertise. Not all developers familiar with iOS development will necessarily be adept at integrating and optimizing CV capabilities.

Data Privacy and Ethical Considerations: Even with on-device processing, businesses must handle visual data responsibly, ensuring they maintain user trust and comply with data protection regulations.

Bias and Fairness: Like all machine learning models, CV models can be susceptible to biases based on the data they’re trained on. This can lead to skewed or discriminatory outputs, which can be problematic both ethically and practically.

Reliance on Apple’s Ecosystem: By deeply integrating into the iOS platform, businesses may find themselves heavily reliant on Apple’s ecosystem. Any significant changes or shifts in Apple’s policies, hardware, or software strategies could impact these businesses.

How to Get Started with Computer Vision Applications on iOS

You know, when I first thought about incorporating Computer Vision (CV) into iOS, it felt a bit like scaling a technical mountain. But, as with all daunting tasks, a step-by-step approach clears the mist. Here’s my guide to navigating the initial stages of integrating computer vision into your iOS applications:

1. Understand Business Needs

Identify Objectives: Begin by outlining what you aim to achieve with computer vision. Are you looking to enhance user experience, automate certain functions, or introduce a revolutionary feature?

Assess the Impact: Evaluate how integrating CV will affect your existing app functionalities, user experience, and overall business model.

2. Choose the Right Tools

Apple’s Native Frameworks: Familiarize yourself with Apple’s Vision framework. Explore the range of functionality it provides, and evaluate whether it covers all your needs.

Third-party Libraries: There are several powerful third-party CV libraries that can be integrated with iOS apps. Research options like OpenCV, TensorFlow Lite, or Turi Create to determine if they fit your needs.

Evaluate the Trade-offs: While native tools offer seamless integration and optimization, third-party libraries might provide broader functionalities or specific features not available in Apple’s offerings.

3. Hire the Team

Specialized Expertise: CV on iOS requires a unique blend of skills. Consider hiring iOS developers with experience in computer vision or training your existing team.

Collaborate: It might be beneficial to collaborate with data scientists or machine learning experts if your CV objectives involve creating or fine-tuning custom models.

Here at DashDevs, our experienced developers have already navigated the pitfalls and challenges of computer vision projects. Our expertise ensures a smoother development process, avoiding the costly mistakes beginners often make. Feel free to contact us to talk about your Computer Vision project idea in detail.

4. Consider Iterative Development

Start with an MVP (Minimum Viable Product): Before fully integrating CV into your app, develop a minimal version that incorporates the essential CV features. This allows you to test the waters, gather user feedback, and understand potential challenges. When it comes to machine learning solutions, an MVP often looks like a basic demo of a solution. It might not yet be fully trained or optimized for a real-life dataset on which it must perform in actual scenarios.

Feedback Loop: Regularly collect feedback from users, developers, and stakeholders. This feedback will be crucial in refining and optimizing your CV functionalities. In the context of machine learning, this feedback can also inform adjustments in model training and data preparation.

Continuous Improvement: Computer vision is a rapidly evolving field. Stay updated with advancements, and be prepared to iterate and improve your app functionalities accordingly. This is especially true for machine learning models, which can benefit from continuous training on new data and refining based on the latest research and best practices.

5. Testing and Validation

Diverse Scenarios: Ensure you test your CV features under varied real-world conditions. This includes different lighting situations, angles, and potential obstructions.

Accuracy and Reliability: Focus on the accuracy of your CV outputs. Ensure that the results are consistent and reliable, which is crucial for user trust and a seamless user experience.
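One simple way to combine both points is to report accuracy per test condition rather than as a single overall number. The Python sketch below assumes each test run is recorded as a tuple of condition, predicted result, and actual result; the record format, the condition labels, and the function name `accuracy_by_condition` are all hypothetical.

```python
from collections import defaultdict


def accuracy_by_condition(records):
    """Return {condition: accuracy} for (condition, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for condition, predicted, actual in records:
        total[condition] += 1
        if predicted == actual:
            correct[condition] += 1
    return {name: correct[name] / total[name] for name in total}


# Illustrative records only, not real test data.
RECORDS = [
    ("daylight", True, True),
    ("daylight", False, False),
    ("low_light", True, False),
    ("low_light", True, True),
]

report = accuracy_by_condition(RECORDS)
```

A breakdown like this makes it obvious when a feature that looks reliable on average is in fact failing under one specific condition, such as low light.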

6. Ethical Considerations and User Trust

Transparent Communication: Clearly communicate to your users how their visual data is processed, used, and stored. Emphasize on-device processing and data privacy to build trust.

Avoid Biases: Ensure that your CV models are trained on diverse datasets to avoid biases and ensure fairness in outputs.

7. Stay Updated

Keep Learning: The world of computer vision is dynamic. Regularly update your knowledge by attending workshops, conferences, or online courses.

Engage with the Community: Join forums, online communities, or developer groups focused on CV on iOS. Engaging with peers can provide invaluable insights, tips, and solutions to challenges.

Embarking on the journey of integrating Computer Vision into your iOS app is undoubtedly challenging but equally rewarding. With the right strategy, tools, and mindset, you can harness the potential of CV to enhance your app, delight your users, and carve a niche in the competitive app landscape.

Conclusion

Reflecting on my journey with Computer Vision, it’s clear to me that it’s more than just endowing machines with the gift of sight. It’s about understanding, interpreting, and making informed decisions based on visual inputs. With giants like Apple propelling the technology forward, the possibilities for its application are vast and exciting for businesses across many industries.

Recognizing the immense value that experience brings, our development agency boasts a team of seasoned computer vision specialists. Our track record speaks for itself, having delivered successful projects across diverse sectors. By choosing our services, you’re not only investing in technology but also in a partnership dedicated to your success.

Isn’t it time you made the smart choice? Connect with us, and let’s shape the future together.